I first got into making glitch art whilst experimenting with converting cheap digital cameras to near infrared (I was still painting then and trying to find new ways of looking at the world). I had found a really, really cheap camera given away in a company promotion, and after taking a few photographs with it I downloaded the images onto my laptop. I noticed that they were in an unusual image format called PPM, and when I opened them some of them were really mangled. (Below is what I consider to be the very first glitch I ever made.)

From there I started to discover circuit bending (the art of making devices malfunction in creative ways) and, through a designer friend (thanks, Claire Penny), the work of Phillip Stearns, specifically his 2012 'Year of the Glitch', in which he took early Kodak digital cameras, rewired them creatively and documented what he did. You can find more about this here:

https://phillipstearns.wordpress.com/tag/year-of-the-glitch/page/2/

There is also the work of Reed Ghazala, considered to be the father of circuit bending. For safety reasons I should state this clearly: NEVER CIRCUIT BEND A DEVICE DIRECTLY CONNECTED TO MAINS ELECTRICITY. You can read more about his work and ideas, and about safe methods of circuit bending, here:

Anti-Theory

From there I started to modify my own cameras, based on research into Phillip Stearns, Reed Ghazala and a few other online resources.

I then started filming with the results of my experiments, for instance this:

The theory and practice of what I'm doing is deceptively simple. It is largely a process of trial and error, and it parallels what I do with files through hex editing, data-moshing and one other method I'll discuss further on, but in some ways it is more satisfying, as I can achieve effects and textures which are uniquely mine.
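For anyone who hasn't tried the file side of this, here is a minimal sketch in Python of what hex editing an image amounts to. It is an illustration under stated assumptions, not my exact process: it uses a binary (P6) PPM, the format of my first accidental glitch, because its header is simple; it leaves the header alone so the file still opens; and it overwrites a few random bytes in the pixel data. The function names `ppm_header_length` and `glitch` are hypothetical helpers, not part of any tool.

```python
import random


def ppm_header_length(data: bytes) -> int:
    """Return the byte offset where pixel data starts in a binary (P6) PPM.

    A P6 header is the magic "P6" followed by width, height and maxval,
    separated by whitespace, then exactly one whitespace byte before the
    pixels. A '#' starts a comment that runs to the end of the line.
    """
    if not data.startswith(b"P6"):
        raise ValueError("not a binary P6 PPM file")
    pos = 2
    fields = 0
    while fields < 3:
        # Skip whitespace and comments between header fields.
        while data[pos:pos + 1].isspace() or data[pos:pos + 1] == b"#":
            if data[pos:pos + 1] == b"#":
                pos = data.index(b"\n", pos)
            pos += 1
        # Consume one decimal field (width, height or maxval).
        start = pos
        while data[pos:pos + 1].isdigit():
            pos += 1
        if pos == start:
            raise ValueError("malformed PPM header")
        fields += 1
    return pos + 1  # the single whitespace byte after maxval


def glitch(data: bytes, header_len: int, edits: int, seed: int = 0) -> bytes:
    """Overwrite `edits` random bytes after the header with random values.

    The same seed always gives the same corruption, so a good result can
    be reproduced.
    """
    rng = random.Random(seed)
    out = bytearray(data)
    for _ in range(edits):
        i = rng.randrange(header_len, len(out))
        out[i] = rng.randrange(256)
    return bytes(out)


if __name__ == "__main__":
    # A tiny 4x2 test image built in memory instead of read from disk.
    header = b"P6\n# test\n4 2\n255\n"
    original = header + bytes(range(4 * 2 * 3))
    offset = ppm_header_length(original)
    glitched = glitch(original, offset, edits=5, seed=42)
    print(offset == len(header), glitched[:offset] == header)
```

Protecting the header is the design choice that matters here: corrupt the width or height and most viewers simply refuse the file, whereas corrupt pixel bytes still decode into something broken but viewable.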

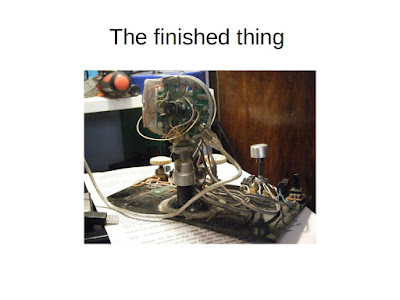

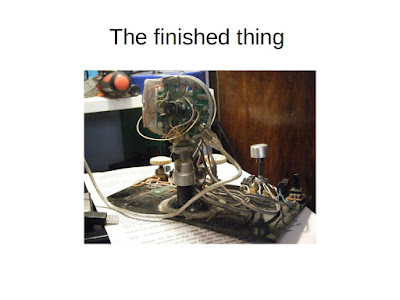

How do we do this? First find a camera, preferably a cheap old webcam. Newer webcams are a lot harder to bend because of the change from large discrete CCDs to systems on chip, which roll the CCD and a few other components into a single package, though there are ways around this which involve soldering onto or bending pins on the image processing chip. Open the camera up and remove the lens to expose the image sensor. Attach the webcam to the PC and open whatever webcam viewing software you are using (so that you see the bends as they happen), then take short lengths of wire and short-circuit between points on the CCD. Note or mark where the best bends are and which ones to avoid: some bends will freeze the camera, meaning you will have to disconnect and reconnect the USB connector and restart the webcam software, and at other times you might get a blue screen and restart on Windows, or a crash and restart on Linux. As you can see from the previous picture of the camera, I then solder onto these points and attach potentiometers so I can control how much of a bend I want. Eventually I'll mount them so they are more usable and less fragile, like this.

Then I point them at a source and adjust the pots until I get something I like. A lot of the time this means pointing them at a CRT monitor (CRT works better than LCD/LED: crisper colours and no backlight glare) which is playing back video or TV, or running an operating system which has flaws in the way it plays back video (I never really use just one process).

Go go preaching - (worlds end)

This was made with a different camera from the previous video. One of the things about circuit bending is that you get a different look and feel depending on the camera and lens, which leads to some unique images that you just can't get with, say, hex editing or data-moshing alone, regardless of which codec you use, so it's a good way of getting something that looks different. When everyone uses the same techniques, codecs and methods, things can begin to look a little samey. I could equally say that about painting or other older media (it's how you use it that matters), but circuit-bent cameras become uniquely yours.

The sound on this is also by me, made with a circuit-bent Casio PT-1 and a DIY ring modulator, all put together in Audacity. I'm always playing around with ideas and was just interested to see if that would work. I have an uneasy relationship with sound, usually preferring to salvage sound from the brokenness of the file, from the artifacts that hex editing can create (but I'll talk about that more in the next post).

We could also run the circuit-bent camera through processing, but I'll leave that for another day, as I've covered some of those techniques elsewhere.

Circuitbending graphics cards

I've also been experimenting with bending older graphics cards, with mixed results. So far I've been using older PCI and AGP graphics cards, such as an old PCI Avance Logic card (actually one of the best so far) and early ATI AGP cards. This method is riskier: it can cause the computer to shut down and can damage the card or the computer. I avoid the graphics chip itself and concentrate on the RAM chips, short-circuiting those and trying to capture the output with my VGA-to-composite adaptor and my trusty PCI Pinnacle PCTV Rave.

Circuitbending a graphics card

Or this in black and white

I also look for damaged cards (Avance Logic)

Some damaged cards can also produce interesting effects, though they can be tricky to work with, as it's often hard to get an operating system up and running when you can't see the prompts or instructions onscreen because of the artifacting. This is my favourite damaged card so far, though (the only identifying information on the card is the main graphics chip, which reads Avance Logic Inc. ALG2302.A).

Don't just use one technique

As I say, I don't just use one technique or method. I will often use a combination of circuit bending, hex editing, data-moshing and a newer (and at the moment my primary) area of research: misinterpretation through operating system flaws in video playback. This started when I was trying out an operating system called Legacy OS 2017.

Using old computers and Legacy OS 2017

I have a thing about using old computers in my work, mainly through necessity (I'm sure that's true for most of us). I would love the latest 64-core, 128-thread Ryzen, but I just can't afford it, so most of my work is made on low-end machines. To see how low-end I could go and still make glitch art, I happened upon a version of Puppy Linux called Legacy OS, which was optimised to run on pre-Pentium 4 machines. It worked reasonably well on a Pentium 3 with a small amount of RAM (when I say small, I'm talking about 128MB to 256MB) and a modest graphics card. I also use Legacy OS to test out machines or check the contents of hard drives; like a lot of Linux distros, it will boot from CD-ROM without needing to be installed.

One day I was testing out a computer with an AGP graphics card in it, a Radeon HD 3650. One of my tests when I'm checking out a machine is to look at what video playback is like (is it fluid, choppy, etc.), and I was playing back a video in a player I had spotted called quickplay (which I have never found in any other distro) when something like this happened (I can't remember what the original footage was; I think it was me wandering around the farmyard where I used to live):

The beast in action

And it kept on happening. It was reproducible whenever I played back video on this machine with this graphics card.

Skullduggery

I tried different graphics cards with the same operating system, the same video and the same video player, but no: it had to be this one graphics card, and even then only some files would actually play, mainly MPEG-4, H.261, MPEG-1/2 and libxvid, plus some stranger formats like MagicYUV.

Key points

1) Run Legacy OS in live mode from an IDE CD-ROM drive (it doesn't like SATA).

2) The graphics card must be an AGP Radeon HD 3650 or, as I later found, a PCI-E Radeon X300SE (found in a lot of Pentium D-era Dell Dimensions), an HD 5000 series card or an HD 7750. (I also later discovered that if you choose Xvesa instead of Xorg when choosing the display manager at startup, you can replicate some of the effects seen with a Radeon card.)

Choose your display manager

As I later found, when setting up Legacy OS at startup you get to choose the display manager. In both Xorg and Xvesa there is an option to choose either 16-bit or 24-bit colour, and that can also give interesting results when using quickplay:

Video playback in quickplay running in 16bit colour
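Part of why the 16-bit option changes the look is simply that there are fewer bits per pixel to go round. Assuming the 16-bit mode uses the common RGB565 layout (5 bits red, 6 green, 5 blue), which is an assumption about this setup rather than something I've checked, this small Python sketch shows how 24-bit colour is squeezed into 16 bits and what survives the round trip. It only illustrates the coarser colour steps; the glitches themselves come from the driver and player bugs, not from this conversion.

```python
def to_rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit-per-channel colour into a 16-bit RGB565 word."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def from_rgb565(word: int) -> tuple[int, int, int]:
    """Expand a 16-bit RGB565 word back to 8 bits per channel."""
    r5 = (word >> 11) & 0x1F
    g6 = (word >> 5) & 0x3F
    b5 = word & 0x1F
    # Copy the high bits into the low bits so full scale maps back to 255.
    return (r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2)


if __name__ == "__main__":
    # A smooth 256-step red ramp collapses to a handful of distinct levels.
    reds = {from_rgb565(to_rgb565(v, 0, 0))[0] for v in range(256)}
    print(len(reds))  # 32: only 5 bits of red survive
```

Green keeps one extra bit (64 levels against 32 for red and blue), which is why gradients in 16-bit modes tend to band more visibly in reds and blues.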

Output obviously depends on input, so the choice of codec and container matters. For instance, this is the same setup as before, running Xorg in 24-bit colour, but trying and failing to play a file encoded by HandBrake as H.264 in an M4V wrapper.

Interestingly, many of these faults cannot be reproduced if you install the operating system to a physical hard drive, especially those found in Linux Mint Bea 2.1 and Legacy OS 4 Mini (the newer version of Legacy OS 2017).

Older operating systems can be exploited for glitch art

Older operating systems can be exploited, especially those based on older Linux kernels (2.6.32 and lower) that have incomplete or incorrect support for certain video cards and/or incomplete support for newer codecs such as H.264 and CAVS. All we need is a supply of older motherboards, a basic knowledge of how to put together a desktop computer and a supply of older video cards to test; combined with older versions of VLC and other media players such as gnome-mplayer, this often gives us surprising and unique faults. A source for old versions of Linux can be found here:

old versions of linux
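When working through a pile of old distributions, a quick first filter is the kernel version, since 2.6.32 is the rough ceiling I've found useful. Here is a hypothetical Python helper, a sketch assuming the kernel release string looks like the usual `uname -r` output (for example `2.6.32-5-686`); the function names and the default ceiling are illustrative choices, not part of any tool.

```python
import re


def kernel_tuple(release: str) -> tuple[int, ...]:
    """Turn a kernel release string such as '2.6.32-5-686' into (2, 6, 32)."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    # A missing third component (e.g. '4.4') is treated as 0.
    return tuple(int(part) for part in match.groups(default="0"))


def is_old_kernel(release: str, ceiling: str = "2.6.32") -> bool:
    """True if the kernel is at or below the ceiling version."""
    # Tuples compare element by element, so (2, 6, 9) < (2, 6, 32).
    return kernel_tuple(release) <= kernel_tuple(ceiling)


if __name__ == "__main__":
    for release in ("2.6.15-23-386", "2.6.32-5-686", "3.2.0-4-486"):
        print(release, is_old_kernel(release))
```

Comparing numeric tuples rather than raw strings matters here: as plain text, "2.6.9" would sort after "2.6.32".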

The video below shows how, by feeding a tailored MP4 file to KMPlayer in Legacy OS 4 Mini, the display can be corrupted, and subsequent files played back will retain that corruption. I've recently found a more elegant version of this in Linux Mint Bea 2.1, from 2006, which does not rely on this method.

Xorg corruption in legacy os 4 mini

This fault also relies on having one of the Radeon cards already outlined, and it looks a little like this (the display is corrupted right from startup and stays that way throughout playback, even if we exit and re-enter the display by restarting it with Ctrl+Alt+Backspace, unlike Legacy OS 4 Mini, which requires that you play the tailored file again):

Video playback in linuxmint bea 2.1

The computer this is being played on is also very modest: an old Socket 754 WinFast board from 2005 running an AMD64 Sempron 3000 with 1GB of RAM and an ATI Radeon HD 3650. To me this is a much more elegant and useful corruption, though it does vary depending on which ATI card you use. Contrast the above with the video below, which was on a different motherboard but with the same card; that motherboard was an MSI Socket 775 board running an old Pentium 4 with Hyper-Threading.

Error must be repeatable to be useful

A lot of these findings come from my research over the last year into installing and using different Linux distributions, after finding the first errors in Legacy OS 2017 gave me a hint of what might be possible. These distributions have so far been the most fertile ground for finding errors in video playback, thanks to a combination of buggy hardware support for newer ATI cards and buggy codec support in media players. I've documented this research elsewhere (in the talk I gave at last year's Fubar and in earlier blog posts), and I don't want to repeat that too much, as I'm just trying to give an overview rather than a detailed walkthrough. Below are two of my favourite errors, both repeatable and neither relying on specific hardware or cards. That is also something I feel is important: if I find an error, I feel it must be repeatable to be useful.

H264 playback in VLC on Ubuntu 6.06

This one came as a surprise. I keep a series of videos in different formats on an external hard drive, ready to play just for this purpose. I loaded an H.264 file into VLC and this happened: a beautiful sliding from right to left, similar to pixel sorting but more fluid.
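For comparison, this is roughly what pixel sorting does, as a minimal generic sketch in plain Python (not how the VLC error works, which is a decoder fault rather than a deliberate sort). It works on an image stored as a list of rows of (R, G, B) tuples and, within each row, sorts every run of pixels brighter than a threshold by luminance. The threshold of 100 and the Rec. 601 luma weights are arbitrary choices for illustration.

```python
Pixel = tuple[int, int, int]


def luminance(pixel: Pixel) -> float:
    """Approximate brightness using Rec. 601 luma weights."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def sort_row(row: list[Pixel], threshold: float = 100.0) -> list[Pixel]:
    """Sort each run of pixels brighter than `threshold` by luminance."""
    out = list(row)
    start = None
    # Loop one step past the end so a run touching the right edge is closed.
    for i in range(len(out) + 1):
        bright = i < len(out) and luminance(out[i]) > threshold
        if bright and start is None:
            start = i
        elif not bright and start is not None:
            out[start:i] = sorted(out[start:i], key=luminance)
            start = None
    return out


def pixel_sort(image: list[list[Pixel]], threshold: float = 100.0) -> list[list[Pixel]]:
    """Apply the run sort to every row, giving horizontal streaks."""
    return [sort_row(row, threshold) for row in image]


if __name__ == "__main__":
    row = [(255, 255, 255), (0, 0, 0), (200, 200, 200), (150, 150, 150), (250, 250, 250)]
    print(sort_row(row))
```

Because each bright run is rearranged independently and dark pixels act as barriers, the result is the familiar streaky, stepped look; the VLC error differs in that it smears the whole frame as it moves.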

The next error was completely unexpected. Before I started using Cheese webcam booth, and later OBS Studio, as my main capture software, I would often use gtk-recordmydesktop to record the malfunctions of whatever OS I was testing at the time, running through the tvtime viewer, which showed the output of the VGA-to-composite adaptor I was using. Now, gtk-recordmydesktop records in OGV format, which has been a common standard for video on the Linux desktop since 2005/6 (I'm not sure of the exact date). You would think there would be some element of backward compatibility, but as I discovered, newer OGV recordings made in modern versions of Linux turn into this beautiful hot mess when played back on older distributions:

Ogv playback in Gnome-mplayer on Ubuntu 10.04

The next blog post in this series will cover sound.